About

Mainstream media publishers pride themselves on verifying the factual accuracy of their stories and, although they sometimes fail to live up to their own standards, what they publish is generally true in the technical sense that it would pass a fact check.

As media critics have noted, however, it is trivially easy to mislead a reader without saying anything that is outright false. For example, emphasizing certain facts while omitting or downplaying others can cause a reader to come away with a false impression of the evidence even if all the facts are accurate. Similarly, prioritizing the voices of certain actors or points of view over others, or simply covering some stories extensively while ignoring or only lightly covering others, can give readers an inaccurate or even false impression of the world.

At the Penn Media Accountability Project (PennMAP), it is our contention that bias (which we define as the preferential selection of some stories, facts, people, events, or points of view over others) is an even bigger problem than the sort of outright lies that misinformation researchers have traditionally worried about. Although we agree that lies are indeed a problem, we believe that merely biased information is far more pervasive. Moreover, because biased information is often true in a narrow, technical sense, it is harder to debunk than demonstrable falsehoods.

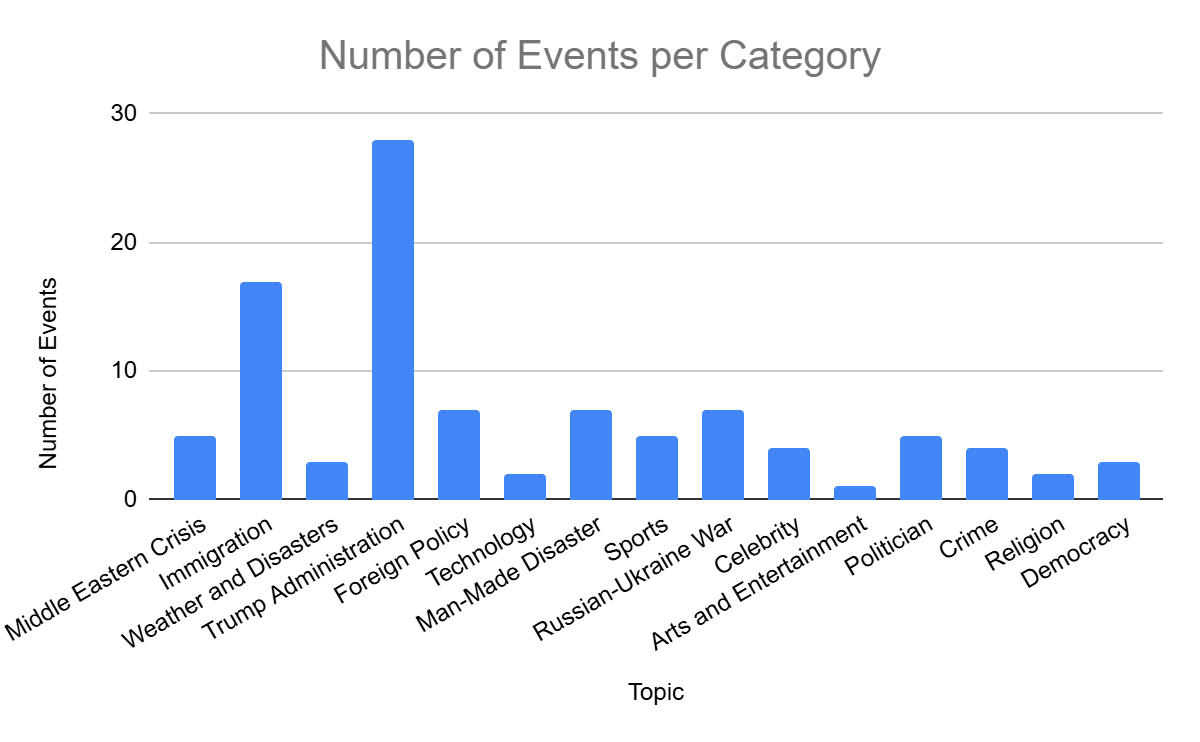

To measure and expose bias in the mainstream media, PennMAP is proud to launch the Media Bias Detector, which tracks and classifies the top stories published by a collection of prominent publishers spanning the political spectrum in close to real time.

Using a combination of AI, machine learning, and human raters, the Media Bias Detector tracks the topics and events that each publisher covers as well as the facts they cite and the overall tone and political lean of their coverage. As a result, we can measure bias at the level of articles, in contrast with existing services, which measure bias at the level of publishers. The difference is important, as individual publishers typically publish hundreds of articles per day and individual articles can vary widely in tone and lean depending on the topic, the author, and other factors. We also conduct weekly national polls to track where Americans get their news and which topics they are aware of (see here for details on the data and methods). Over time we will add more publishers and more measures of bias.

What we will not do is attempt to divine the “truth.” Perhaps more surprisingly, we also don’t try to rank publications on how biased they are. Although, like anyone, we have opinions about which stories are the most important, which facts should be emphasized, and how they should be interpreted, we recognize that our opinions are also biased. No news organization can or should report every single thing happening in the world, nor can it relate every conceivable fact or opinion about what it does report. Some manner of curation is necessary in order to report the news, and thus some form of bias is inevitable. For the same reason, judgements about bias are also inevitably biased. It is not our intention to sort out this philosophical tangle, nor is it clear that it can be sorted out.

Nonetheless, we believe bias is important to measure and expose, for two reasons.

First, news organizations do often present themselves as neutral and objective observers of reality who are simply reporting “the truth” or “the facts.” Exposing the bias inherent in media coverage destroys the illusion of objectivity, allowing consumers to better contextualize the information they glean from their preferred sources.

Second, when consumers get most of their news content from a single source or from a collection of ideologically aligned sources, systematic biases across these “echo chambers” can lead to the rise of “separate realities,” wherein people cannot agree on basic facts or priorities. By identifying which stories or publishers share the same bias, the potential for separate realities can be quantified and countered.

Our hope is that the Media Bias Detector will allow researchers, journalists, advocates, and everyday consumers to better understand the choices that media publications are making every day. Do they choose to write more articles about Joe Biden’s age or Donald Trump’s? Do they choose to cover the election as an entertaining horse race or as a profoundly consequential bifurcation between alternative futures? Do they choose to cover the economy by focusing on inflation or wage increases? Do they choose to cover crime from the perspective of law enforcement or economic impact or the effects of incarceration? Finally, on the other side, what are typical Americans absorbing from the news?

The Media Bias Detector will shed light on these and many other questions. We encourage you to explore our data and to draw your own conclusions. We also encourage you to check out our blog, where we will showcase some of our own analysis using Media Bias Detector data.

Team

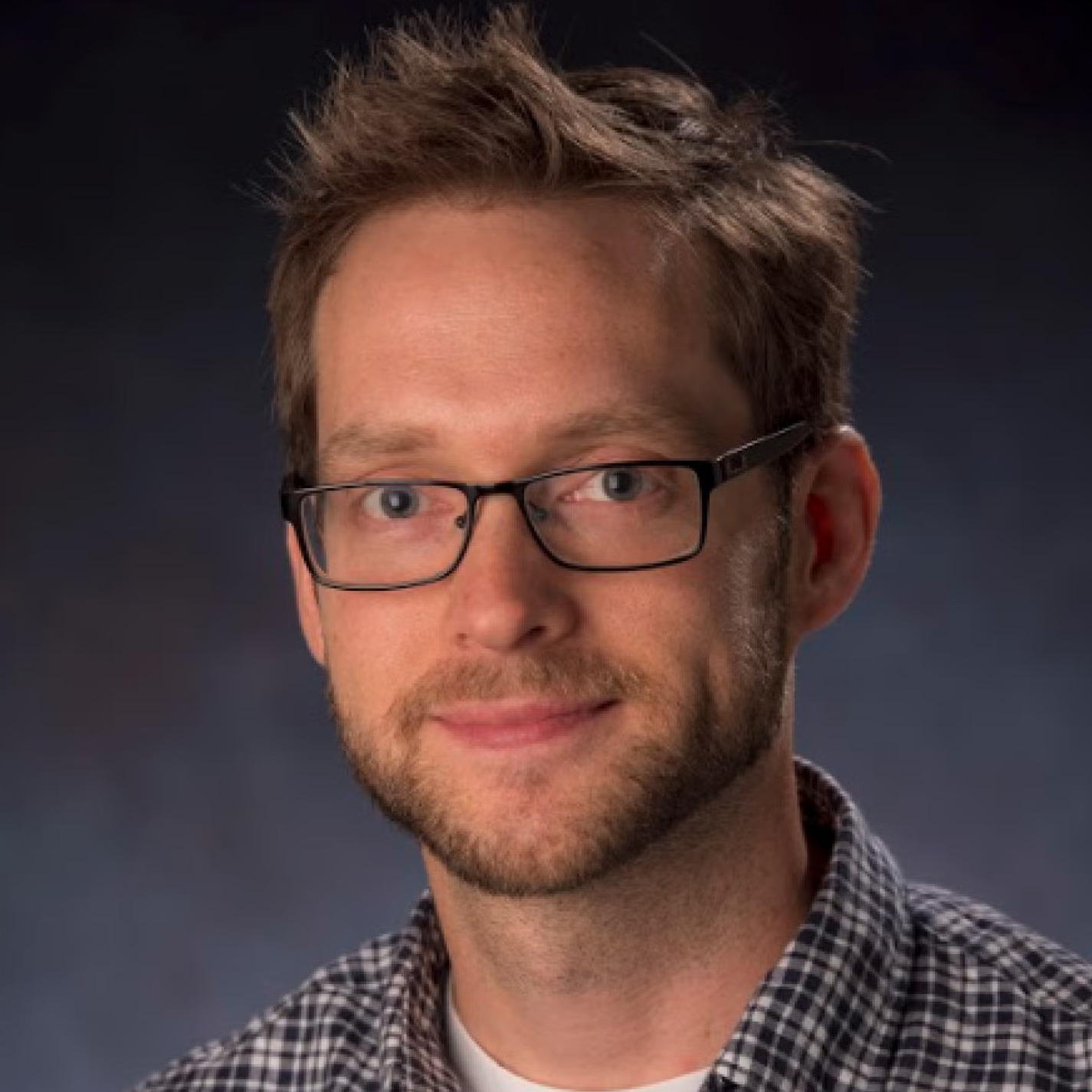

Duncan Watts

CSSLab Director

Jeanne Ruane

CSSLab Managing Director

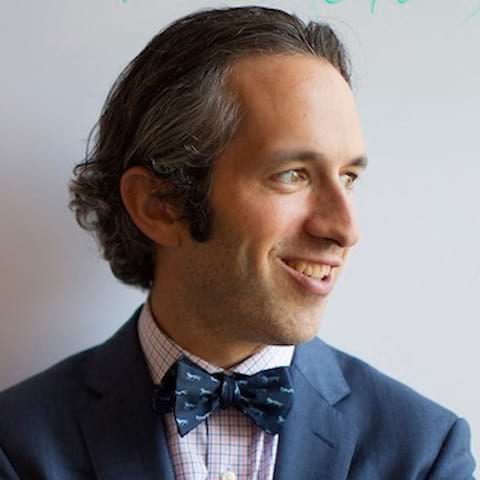

David Rothschild

Economist at Microsoft Research

Anushkaa Gupta

Project Manager

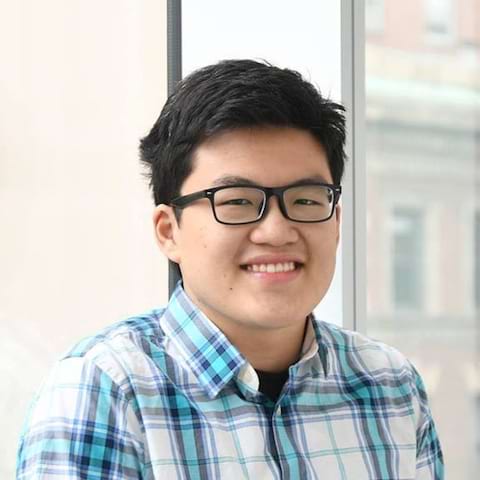

Yuxuan Zhang

Data Scientist

Jenny Wang

Pre-Doctoral Researcher at Microsoft Research

Amir Tohidi

Post-Doctoral Researcher

Samar Haider

Ph.D. student in Computer and Information Science

Calvin Isch

Ph.D. student in Communications

Timothy Dorr

Ph.D. student in Communications

Neil Fasching

Ph.D. student in Communications

Chris Callison-Burch

Associate Professor in Computer and Information Science

Bryan Li

Ph.D. student in Computer and Information Science

Ajay Patel

Ph.D. student in Computer and Information Science

Elliot Pickens

Ph.D. student in Computer Science

Delphine Gardiner

Senior Communications Specialist

Upasana Dutta

Ph.D. student in Computer and Information Science

Research Assistants

Eric Huang, Liancheng Gong, Gina Yan Chen, Jean Park, Carol Tu, Emily Duan, and Palashi Singhal